In my previous post I introduced some of the peculiarities of designing SharePoint 2010 environments for Amazon’s EC2, specifically focused on the AWS platform, storage, snapshots and provisioning. In this post I continue this exploration, moving on to cloning and networking considerations.

Other posts in this series

- SharePoint 2010 Infrastructure for Amazon EC2 Part I: Storage and Provisioning

- SharePoint 2010 Infrastructure for Amazon EC2 Part II: Cloning and Networking

- SharePoint 2010 Infrastructure for Amazon EC2 Part III: Administration, Delegation and Licensing

- SharePoint 2010 Infrastructure for Amazon EC2 Part IV: Cost Analysis

- Amazon VPC and VM Import Updates

Machine names, Domain SIDs and Cloning

In our testing, we were able to run multiple instances of the same AMI concurrently, which can be desirable if you have a team of developers with similar or identical requirements. We could run these instances beside each other without conflicts because we had all roles (including the DC/DNS) on one machine and we locked down the firewall, which is advisable anyway in the cloud. We only allowed the RDP port inbound to start with, and opened HTTP/HTTPS traffic where it was helpful to do so. This cloning story would get much more complicated with multiple servers, as I discuss in more detail in the networking section below.
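If you script the locked-down firewall rather than clicking through the console, a minimal sketch might build the inbound rules as data first. The dictionaries below follow the `IpPermissions` shape accepted by boto3's EC2 client (`authorize_security_group_ingress`). The helper names and CIDR ranges are hypothetical placeholders, not what we actually used.

```python
"""Inbound rules for a locked-down, single-server SharePoint dev instance (sketch)."""
from typing import List, Optional

RDP_PORT = 3389
HTTP_PORT = 80
HTTPS_PORT = 443


def tcp_rule(port: int, cidrs: List[str]) -> dict:
    """One IpPermissions entry allowing inbound TCP on a single port from the given CIDRs."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        "IpRanges": [{"CidrIp": cidr} for cidr in cidrs],
    }


def sharepoint_ingress(admin_cidrs: List[str],
                       web_cidrs: Optional[List[str]] = None) -> List[dict]:
    """Start with RDP only; add HTTP/HTTPS only when web_cidrs is supplied."""
    rules = [tcp_rule(RDP_PORT, admin_cidrs)]
    if web_cidrs:
        rules.append(tcp_rule(HTTP_PORT, web_cidrs))
        rules.append(tcp_rule(HTTPS_PORT, web_cidrs))
    return rules
```

The result would be passed as `IpPermissions=sharepoint_ingress(["203.0.113.0/24"])` to `authorize_security_group_ingress` along with your security group's `GroupId`. Keeping HTTP/HTTPS opt-in mirrors the "RDP first, web traffic only where helpful" approach described above.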

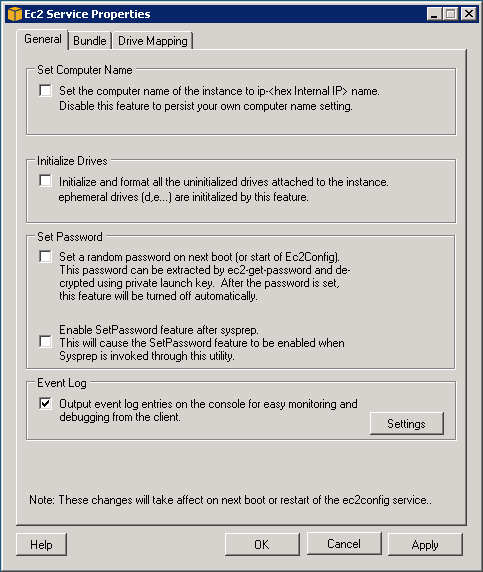

One big “gotcha” in this area is the default settings of the EC2 Service Properties when Amazon’s Windows AMI is first launched. This is one of the few additions that Amazon packages with their Windows image. In the EC2 Service Properties you should deselect the Set Computer Name and Set Password options. The Set Computer Name option causes serious problems for SharePoint, because it changes the machine name whenever the instance starts up. The good news is that if you are building a new base image, you only need to make this change once, before capturing the image. Just be careful not to change this setting back later on.
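If you would rather bake this change into an image build script than click through the dialog, a minimal sketch follows. It assumes the options are stored in the EC2Config service's `config.xml`, commonly found under `C:\Program Files\Amazon\Ec2ConfigService\Settings\`. It also assumes each option is a `Plugin` element with a `Name` and a `State`, and that the two options map to the plugin names `Ec2SetComputerName` and `Ec2SetPassword`. Check these assumptions against the file on your own AMI before relying on it.

```python
import xml.etree.ElementTree as ET

# Assumed plugin names behind the "Set Computer Name" and "Set Password" options.
PLUGINS_TO_DISABLE = ("Ec2SetComputerName", "Ec2SetPassword")


def disable_plugins(config_xml: str, names=PLUGINS_TO_DISABLE) -> str:
    """Return config_xml with the named plugins' <State> set to Disabled."""
    root = ET.fromstring(config_xml)
    for plugin in root.iter("Plugin"):
        state = plugin.find("State")
        # Only touch plugins we recognise; leave everything else as it was.
        if plugin.findtext("Name") in names and state is not None:
            state.text = "Disabled"
    return ET.tostring(root, encoding="unicode")
```

Note that `ET.tostring` does not emit an XML declaration. Compare the output with the original file before writing it back.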

Networking

By default, Amazon assigns a public IPv4 address to EC2 instances via DHCP. This IP address changes whenever an instance is launched, allowing Amazon to manage their pool of public IPv4 addresses effectively. Until IPv6 adoption ramps up, this is the only viable option for an offering of this scale, although Amazon are actively looking at IPv6 today. By default, Amazon also assigns a private IPv4 address to EC2 instances via DHCP. This internal IP address also changes whenever an instance is launched.

Internal and external dynamic IP addressing introduces considerable design complexity for SharePoint development environments. This complexity is heightened by the addition of Elastic IP Addresses and the Virtual Private Cloud options.

Domain Controllers and Private DHCP

As I’ve mentioned before, SharePoint 2010 development environments need to be members of a domain in order to successfully deploy the Search or User Profile Service Applications. Unfortunately, dynamically-assigned IP addresses and domain controllers don’t play nicely together. I shan’t delve into the details here, but this has been known to cause slow start-up times for DCs, and member servers won’t know how to find the DCs once a DC’s IP address changes. There are also firewall policy implications.

With the exception of the Virtual Private Cloud (discussed below), we had to rule out persistent multiple-server farms for these reasons. The complexities of managing this stuff on a daily basis would be beyond most users and would probably create system instability or at the very least, add cost (by leaving the DC on all the time). The option of adding a second DC for resilience and to possibly work around some of these issues would add further complexity and cost. Basically, this wasn’t working.

Developing on Domain Controllers

The only viable approach we could find for working with DCs on DHCP was to make the SharePoint development machine the domain controller. This is a step backwards in many ways, as this configuration has been known to cause issues. As I summarised in March 2010 (from the link above):

- Domain Controller security is bad for development. It means developers will be coding as Domain Admins and they will be doing so on a machine with Domain Controller security policies. This is just a mess. It’s tighter than it should be in some respects and looser in others.

- SQL doesn’t like to run on a DC.

- Running a DC, SQL and SharePoint on the same machine creates a massive load of service start-up contention and sometimes the environment will start from an unstable point because dependent services will not be ready when a depending service tries to start.

- This also increases start-up time considerably.

- Adding Visual Studio to this mix causes known performance issues. The machine simply can’t keep up with doing all of this.

Having said all that, those observations were made a while ago on lower-performing equipment. On EC2 we didn’t find performance or installation particularly troublesome, although we did encounter a security policy issue or two. I still have reservations about the code quality that will emerge from development on a domain controller. But if this is acceptable for your requirements, then I think the most significant Private DHCP issue has been conquered. If not, you will probably need to look at the Virtual Private Cloud. Other topologies are conceivable, but they bring even more complexity than we’re already contending with and are unlikely to be broadly usable.

Public DHCP issues

The primary issue with dynamic public IP addresses is finding out what the new address is. This is easy enough if you have access to the AWS console, as you can pull the new address directly from the instance description and even download a file to launch an RDP connection to the new IP address directly. However, it’s very unlikely that it will be acceptable to give access to the AWS Management Console to all users. This leaves three options, as I see it:

- Leave the machines running 24/7 (at a potentially massive increase in cost).

- Have an administrator send/provide the addresses to users as the instances are started up. This feels very clunky to me and untenable in the long term.

- Find a management tool (there are a few) or a scripted approach to handling this scenario.
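As an illustration of the scripted option, here is a minimal sketch an administrator might run. It takes the dictionary returned by boto3's `describe_instances` (which lists instances under `Reservations` → `Instances`, each with `InstanceId`, `State` and, when assigned, `PublicIpAddress`) and produces a small `.rdp` file per running instance. The helper names are hypothetical. Mapping instances to users, and delivering the files, is left to whatever process you settle on.

```python
from typing import Dict, Optional


def public_addresses(response: dict) -> Dict[str, str]:
    """Map instance ID -> current public IP for running instances in a describe_instances response."""
    addresses = {}
    for reservation in response.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            state = instance.get("State", {}).get("Name")
            ip = instance.get("PublicIpAddress")
            # Stopped or pending instances have no usable public address yet.
            if state == "running" and ip:
                addresses[instance["InstanceId"]] = ip
    return addresses


def rdp_file(address: str, username: Optional[str] = None) -> str:
    """Minimal Remote Desktop connection file pointing at the given address."""
    lines = [f"full address:s:{address}"]
    if username:
        lines.append(f"username:s:{username}")
    return "\r\n".join(lines) + "\r\n"
```

In practice the response would come from something like `boto3.client("ec2").describe_instances()`. The point is that users receive a ready-made connection file instead of needing console access.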

Whatever the solution, it’s likely to form part of the broader question of administration, management tools and delegation, which I’ll come back to in the next post. I believe this can be solved without too much difficulty, but it requires some thought along these lines in order to avoid a mess.

Elastic IP Addresses

One way that Amazon has tried to ease the pain of Public DHCP is the Elastic IP Address. By default, each customer is given five, although you can request more. Elastic IP addresses are applied to an instance while it’s running. A few minutes after one has been applied, it takes over from the DHCP-assigned address and users can reach the instance at their usual address. However, this requires an administrator to associate the Elastic IP Address with the instance after it has started. Alternatively, it can be scripted. Just keep in mind that this is another task that probably shouldn’t be delegated to everyone by giving all users access to the AWS console.
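A minimal sketch of the scripted association, assuming boto3: wait for the instance to reach the running state with the client's `instance_running` waiter, then call `associate_address`. As far as I understand it, EC2-Classic addresses are identified by `PublicIp` while VPC addresses use an `AllocationId`, so the hypothetical helper below builds whichever form you supply.

```python
from typing import Optional


def associate_kwargs(instance_id: str,
                     public_ip: Optional[str] = None,
                     allocation_id: Optional[str] = None) -> dict:
    """Arguments for associate_address: PublicIp (EC2-Classic) or AllocationId (VPC)."""
    if (public_ip is None) == (allocation_id is None):
        raise ValueError("supply exactly one of public_ip or allocation_id")
    kwargs = {"InstanceId": instance_id}
    if public_ip is not None:
        kwargs["PublicIp"] = public_ip
    else:
        kwargs["AllocationId"] = allocation_id
    return kwargs


def attach_elastic_ip(ec2, instance_id: str, **address) -> None:
    """Block until the instance is running, then associate the Elastic IP with it."""
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
    ec2.associate_address(**associate_kwargs(instance_id, **address))
```

Here `ec2` would be a client from `boto3.client("ec2")`, for example `attach_elastic_ip(ec2, "i-…", public_ip="203.0.113.10")`. Running this from an administrator's start-up script keeps EIP rights out of end users' hands.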

One thing that’s particularly crafty about Elastic IP Addresses is that you are charged $0.01 for each hour one is not in use. If you’re diligent about turning off your machines when you’re not using them, you will get nailed for not using the IP address. Granted, it’s a small charge, and with IPv4 address supplies dwindling very quickly, perhaps not that unreasonable.
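To put a number on that charge, here is a quick sketch of the arithmetic, using the $0.01 per idle hour rate quoted above (pricing may well have changed since). An instance used for a 40-hour working week leaves its address idle for the other 128 hours of the 168-hour week.

```python
from decimal import Decimal

IDLE_RATE_PER_HOUR = Decimal("0.01")  # rate quoted in this post; check current pricing
HOURS_PER_WEEK = 168


def weekly_idle_charge(hours_running_per_week: int, addresses: int = 1) -> Decimal:
    """Weekly charge for Elastic IPs left unattached while their instances are stopped."""
    idle_hours = HOURS_PER_WEEK - hours_running_per_week
    if idle_hours < 0:
        raise ValueError("an instance cannot run more than 168 hours a week")
    return idle_hours * IDLE_RATE_PER_HOUR * addresses
```

For a 40-hour week that comes to $1.28 per address, or $6.40 a week if all five default addresses sit idle. `Decimal` is used simply to avoid floating-point rounding in currency sums.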

In my view, Elastic IP Addresses probably aren’t going to solve a lot of problems, but in some cases they may make things easier, particularly if you point DNS at these addresses.

Virtual Private Cloud

Update 17 March 2011: the information regarding the public IP addresses and the VPC below is now out of date. Please see my follow-up post on Amazon VPC and VM Import Updates for more information.

The Virtual Private Cloud (VPC) is effectively a VPN connection between your network and AWS. It allows fixed private IP addresses and DHCP options such as DNS/WINS servers. It also lets you connect existing assets to the cloud, for instance management or backup servers. This may also help with SSO. I shan’t belabour the design options for the VPC, because at face value it should be fairly obvious whether it’s the right fit for your uses. There are obvious security considerations in opening up this communication across the WAN to a third party. That’s not to say there aren’t ways it can be set up well, for instance by creating a dedicated domain in the VPC.

The most important thing to know about the VPC is that when instances are launched they only get a NIC with an IP address on a VPC subnet. There is no public IP address for the instance. This means the only way you can access the instance is via the other end of the VPN (typically the corporate network). This may introduce some funky routing and potentially degrade speed/reliability for users working from home or on client sites. On the other hand, it may not. It’s critical that this option is thought through with a broad design team including internal network and systems teams. I would highly recommend testing/piloting this configuration as well (noting that the initial configuration may be expensive for a test, since it will integrate with production infrastructure).

I think the VPC can address many of the shortcomings of the standard EC2 IP addressing approach if public IP addressing is not a requirement. I’m not sure why NAT couldn’t have been used to allocate fixed internal addresses by default, but it hasn’t been, so we’ve only got one way into the VPC. Once in it, you can deploy single-server machines as we did without the VPC (assuming the firewall is locked down in the same way), or join SysPrep’d SharePoint servers to a shared domain infrastructure. The latter assumes SharePoint provisioning (scripting installation/configuration) is mature enough that manual configuration steps don’t impede productivity. Other topologies may be valid as well. In principle it shouldn’t be miles different from your LAN. The main things to understand are that the private IP addresses are assigned by Amazon and that there is just the one way in. Note: there’s quite a bit to understand about planning the VPC itself, and about pricing for that traffic. This is all outside the scope of what I’m inspecting here, so please refer to the Amazon VPC resources for more information. Also be aware that it’s still in Beta.

Networking can be enough to melt anyone’s brain, so I’ll save administration, delegation and licensing until my next post.

Concerning DCs on DHCP: Run DNS on the DC. Give the DC an Elastic IP and open the DNS port to 0.0.0.0. Point all your instances to the Elastic IP to get their DNS. Then have your DCs register their private IPs in DNS. As the private IPs change, they’ll get updated in DNS and distributed out to all of the instances via the static Elastic IP. Thus there’s no need to run DCs on the SharePoint instances. – Kevin

Hi Kevin,

Thanks for this. Good idea. Out of curiosity, have you tried this configuration on EC2? Do the DCs boot in decent time if they are getting an Elastic IP address part of the way through the boot process? Or do you script that? And is there any lag in re-establishing communication with the DC after the Elastic IP is assigned? Also, I’m assuming the VPC is a requirement for this configuration, in order to set up the private IP ranges. Is that right?

Cheers,

Tristan