The IIS.NET Search Engine Optimization (SEO) Toolkit provides a powerful analysis tool that can generate reports for web editors and can also automatically generate sitemaps and robots.txt files. These reports not only provide insight into page rank improvements but also help content editors identify missing or duplicate content and find broken links. This post provides an overview of how the tools can be used by content editors or web managers who do not have access to the server infrastructure, and what you can expect to see when running an SEO analysis against an out-of-the-box SharePoint 2010 Publishing site. I will also review the server tools that generate sitemaps and robots.txt files.

Installing the SEO Toolkit

Although Remote Server Administration Tools can be installed on Windows Vista and Windows 7, I have produced the directions below on my Windows Server 2008 R2 desktop. The instructions should be fundamentally the same for any OS once IIS Manager is available locally, however it is installed. To be crystal clear, the SEO Toolkit can be used by anyone with Windows Vista, Windows 7, Windows Server 2008 or Windows Server 2008 R2. It is not a requirement to have access to the web server and it is not necessary to install IIS locally.

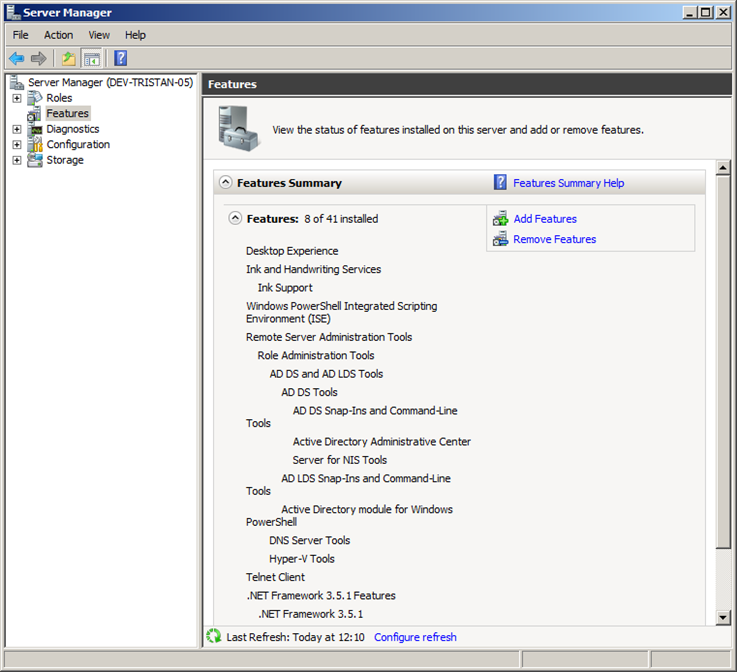

On Windows Server 2008 and 2008 R2 the IIS Manager Feature can be added through Server Manager, even if the IIS Server Role is not installed.

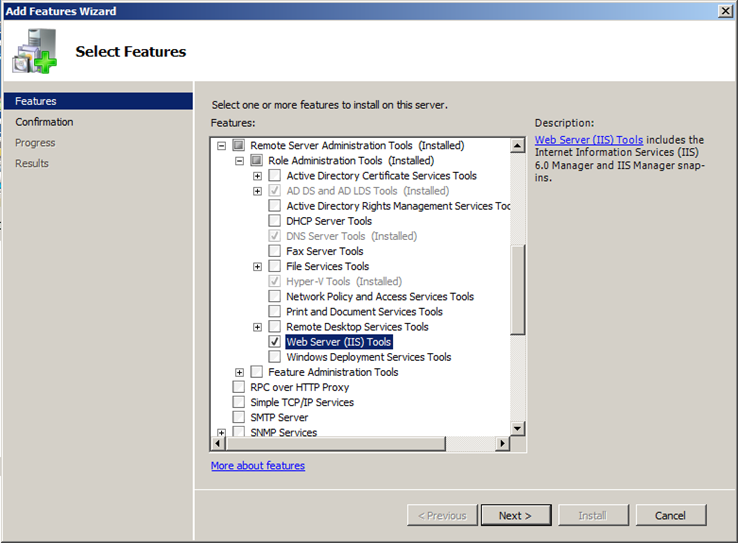

Expand the Remote Server Administration Tools node and select the Web Server (IIS) Tools node.

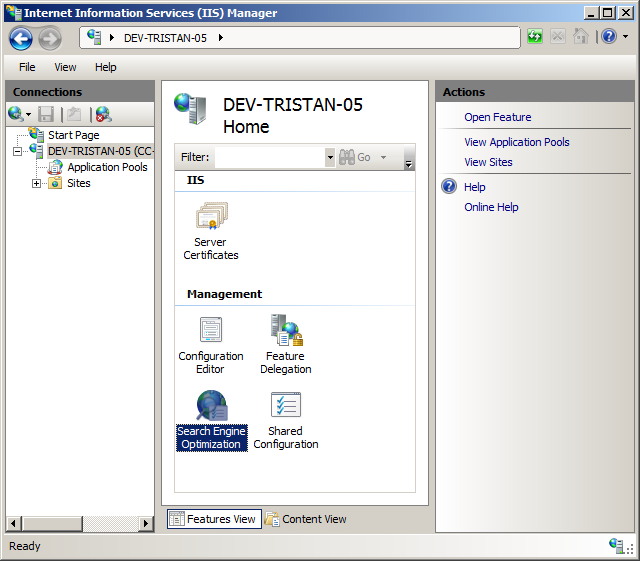

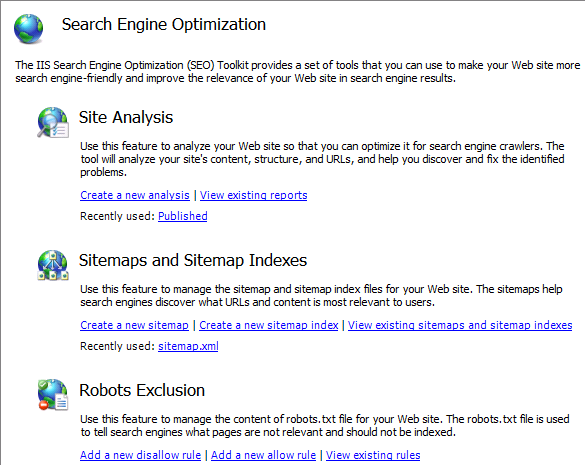

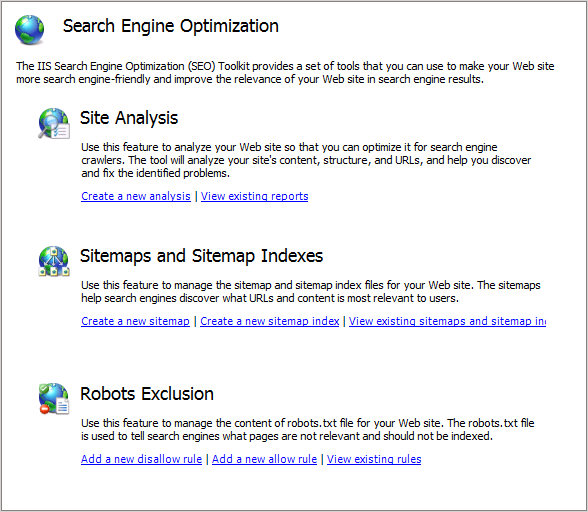

Click Next and Install. Wait for the Add Features Wizard to complete and then download and install the SEO Toolkit. IIS Manager is available in the Administrative Tools menu and should look something like this when you click on your local machine’s connection in the left-hand pane.

The SEO Toolkit home page looks like this.

I shan’t go over everything here because there is an excellent three-minute video on the SEO Toolkit home page (linked above), which details the basic functionality of the tool.

Analysing a SharePoint 2010 Publishing site

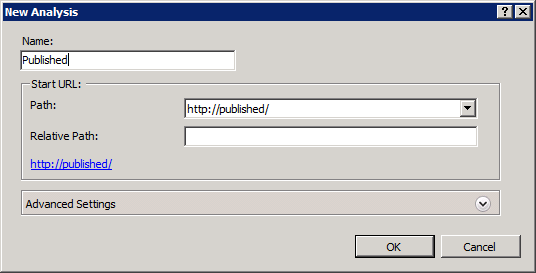

Click the first link on the SEO Toolkit landing page and Create a new analysis of your site.

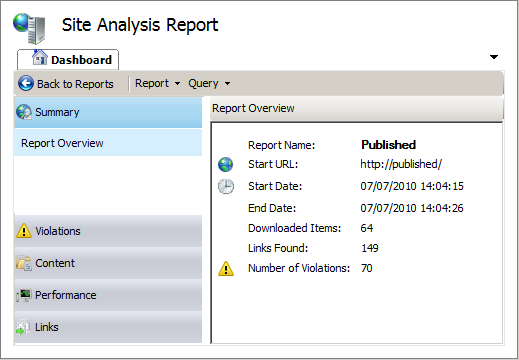

The analysis takes you directly to the Site Analysis Report.

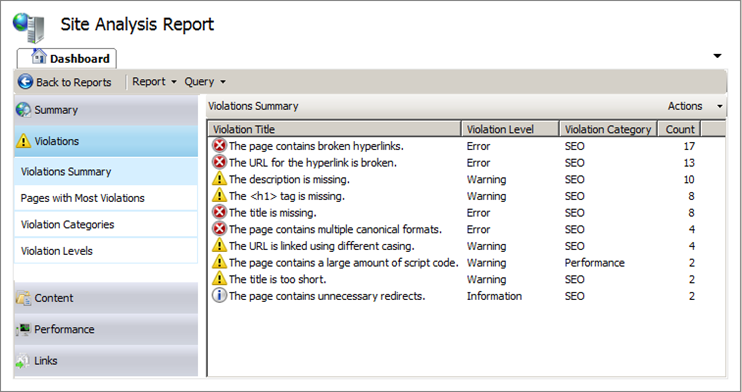

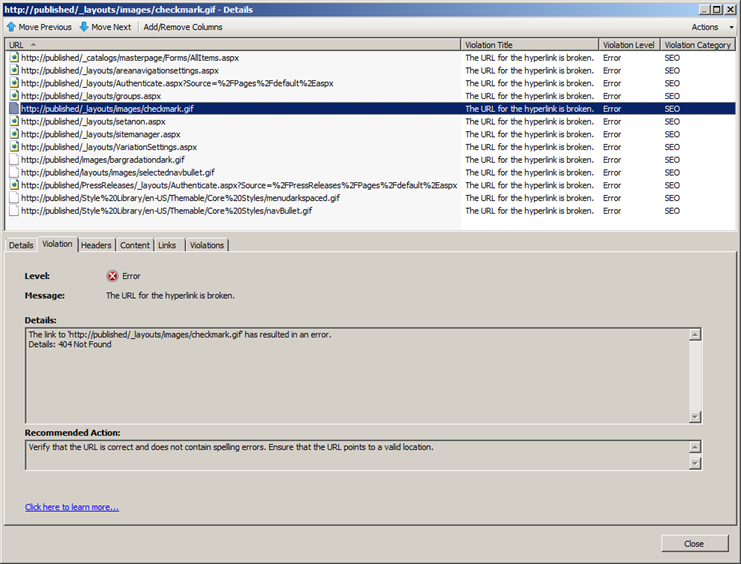

Clearly, the Violations are of interest. What sort of things do they tell us? I’ll look at the errors first.

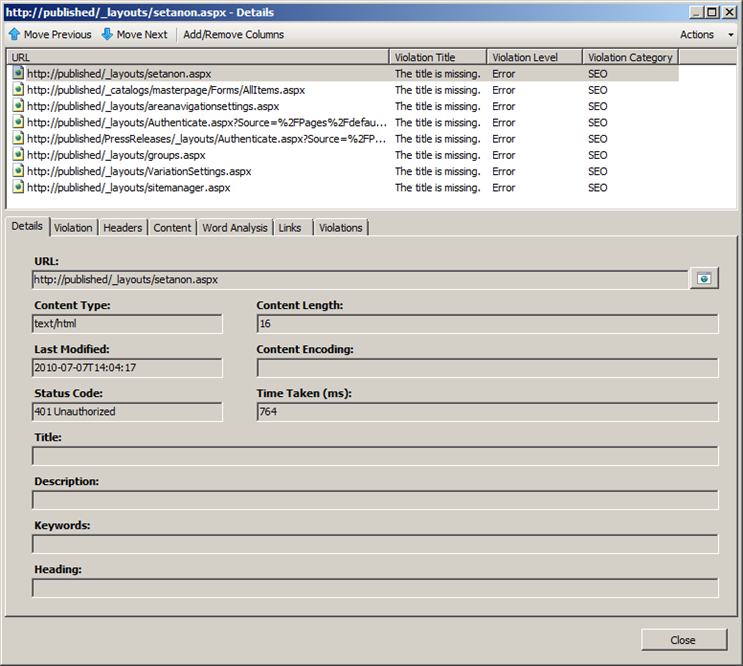

Drilling into “The title is missing”, we find that the five pages are the targets of links to authenticated content on the out-of-the-box Publishing site template’s home page. These return 401 Unauthorized errors in this case, since this is an anonymous access zone.

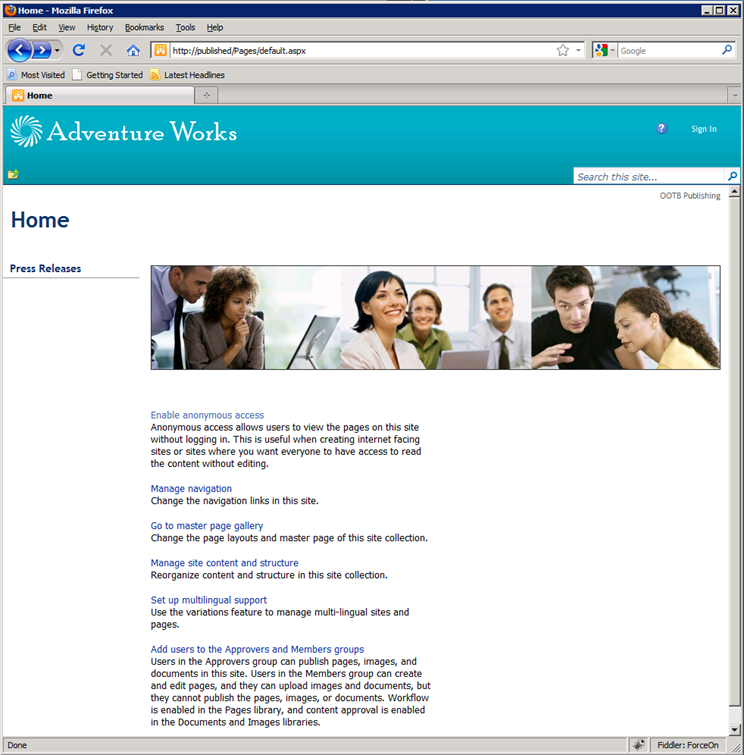

The Publishing Portal home page, which includes links to authenticated content

So these errors are unsurprising, now that we know what they are. If this were real content, ideally we would modify it to remove these links to authenticated pages.

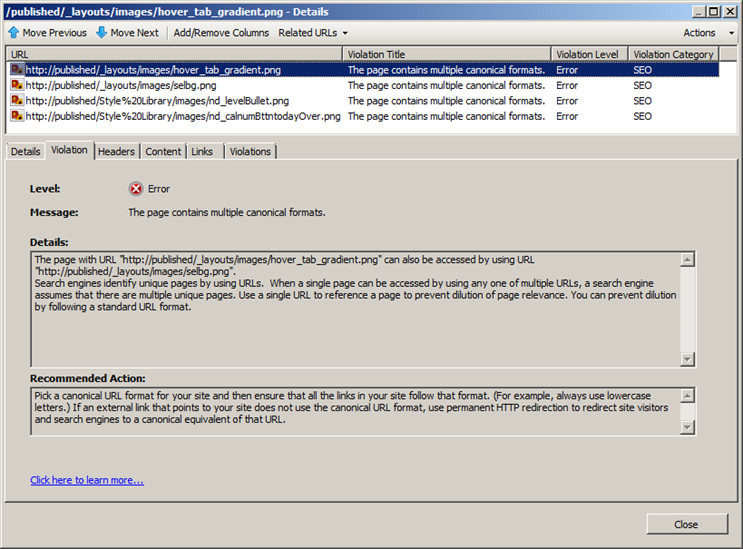

So what about that Canonical Formats message?

This violation tells us that a single object can be accessed using two different links. In this case we have two sets of duplicated images. The first two .png files are transparent spacers and the second two are orange.
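To make the idea concrete, here is a minimal Python sketch of one way to spot a single object that is reachable through more than one link: group URLs by a hash of the bytes they return. This is not how the SEO Toolkit implements its Canonical Formats rule (I don't know its internals); the function name, URLs and bodies are all hypothetical.

```python
import hashlib
from collections import defaultdict


def find_duplicate_objects(fetched):
    """Group URLs whose response bodies are byte-for-byte identical.

    `fetched` maps each URL to the bytes returned for it.
    """
    by_digest = defaultdict(list)
    for url, body in fetched.items():
        by_digest[hashlib.sha256(body).hexdigest()].append(url)
    # Keep only the groups where more than one URL served the same bytes.
    return [sorted(urls) for urls in by_digest.values() if len(urls) > 1]


# Hypothetical URLs and bodies, for illustration only.
sample = {
    "http://example.com/images/spacer.png": b"transparent-pixel",
    "http://example.com/Images/Spacer.png": b"transparent-pixel",
    "http://example.com/images/logo.png": b"logo-bytes",
}
duplicates = find_duplicate_objects(sample)
print(duplicates)
```

In a real check the bodies would come from crawling the site; here they are hard-coded so the sketch runs without a network connection.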

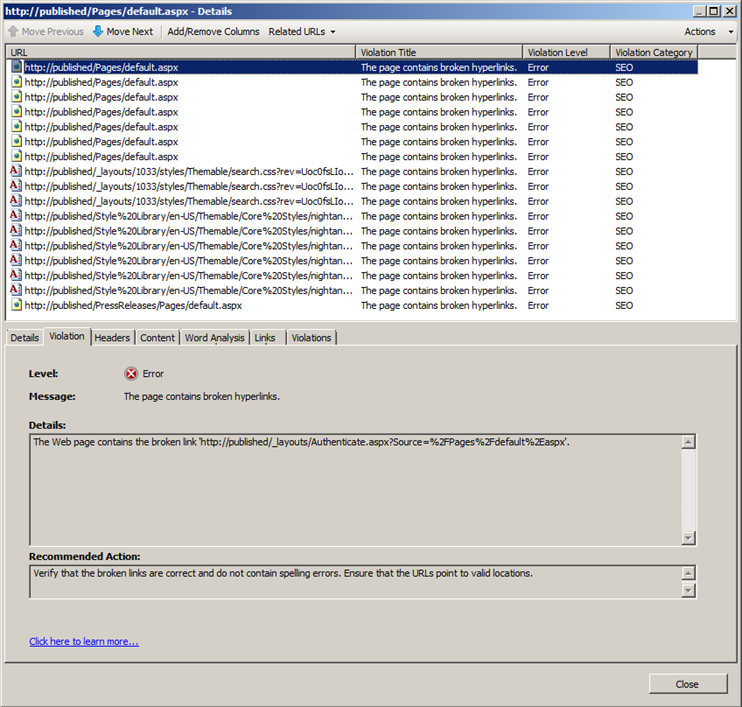

That’s precisely the sort of thing we would hope to find out about and correct. So what about “The page contains broken hyperlinks”?

Again, all of these links are broken because they point to authenticated content. It’s the same story for “The URL for the hyperlink is broken”, except for the five .gif files that appear there.

In this case the images are actually missing. Again, this is exactly the sort of thing we want to know.
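The distinction drawn above, between links that fail because they need a sign-in and links whose target is genuinely missing, can be sketched in a few lines of Python. This is only an illustration under assumptions: the status codes would normally come from a crawl, and the function name and URLs are hypothetical rather than anything the SEO Toolkit exposes.

```python
def classify_broken_links(link_statuses):
    """Split failing links into those needing sign-in and those that are missing.

    `link_statuses` maps each URL to the HTTP status code it returned.
    """
    needs_auth, missing, other = [], [], []
    for url, status in link_statuses.items():
        if status < 400:
            continue  # Not an error response, so not a broken link.
        if status in (401, 403):
            needs_auth.append(url)
        elif status in (404, 410):
            missing.append(url)
        else:
            other.append(url)
    return {"needs_auth": needs_auth, "missing": missing, "other": other}


# Hypothetical crawl results, for illustration only.
results = classify_broken_links({
    "http://example.com/Pages/default.aspx": 200,
    "http://example.com/members/news.aspx": 401,
    "http://example.com/images/banner.gif": 404,
})
print(results)
```

Separating the two groups helps an editor decide whether to remove a link (authenticated content on an anonymous site) or restore a file (a missing image).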

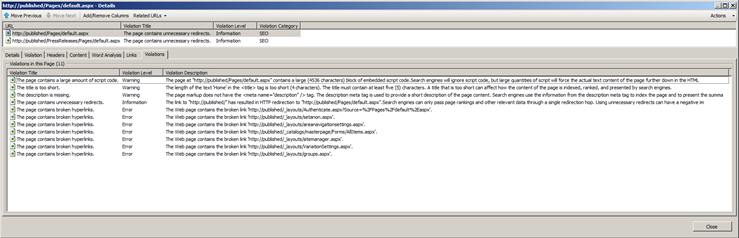

Unsurprisingly, all of these errors point out fundamental problems in the site which content owners would want to correct even if they were unconcerned with page rank. The warnings, which I skipped over earlier, provide supplementary insight into changes that can improve page rank in an otherwise functionally correct website. Rather than discussing those individually, I’ll just include a screenshot of the Violations tab in the bottom half of the details pane. This tab summarises all of the violations on a selected page, which will improve the editor’s experience when making these changes.

What’s particularly useful about this view is that the top pane enumerates each page that violates the specified rule, while the Violations tab enumerates all of the violations for the object selected in the top pane (the default home page in this instance).

Some of the other dashboards reveal slow-performing pages, most linked pages, redirects, pages blocked by robots.txt, a status code summary, a list of external links and more. It’s a very useful set of tools. If this has been at all interesting, it’s definitely worth reviewing the video and other resources up at IIS.NET.

Server tools

Everything that I’ve discussed so far can be run against any site that the SEO Toolkit user can browse to. Server access is completely unnecessary. However, there are two added tools that have to be run on the server. This should not be hugely problematic for the content owner, as these tools need to be updated relatively infrequently once they’ve been set up initially.

Creating a Sitemap

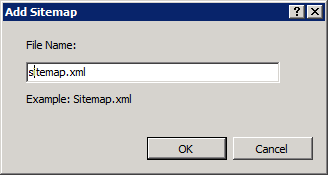

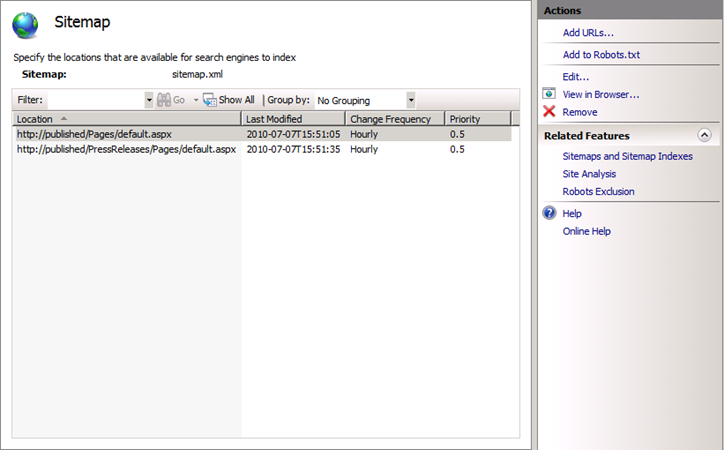

Hopping on to my server, I click the Create a new sitemap link and specify the Sitemap file name.

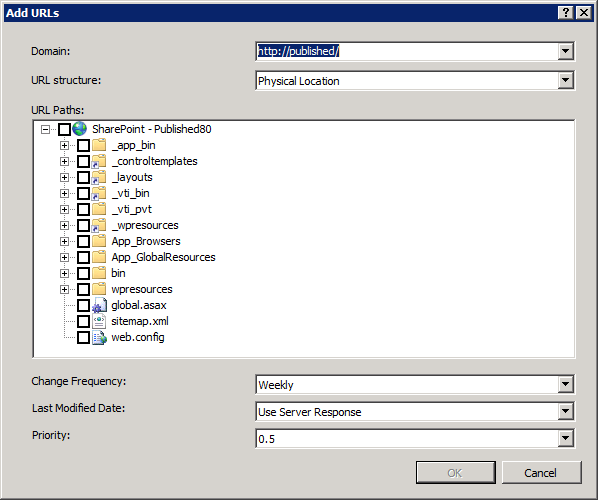

This is my favourite bit. When the Add URLs dialogue first launches, it displays the IIS site files in inetpub.

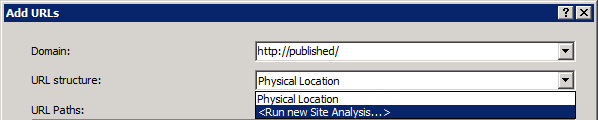

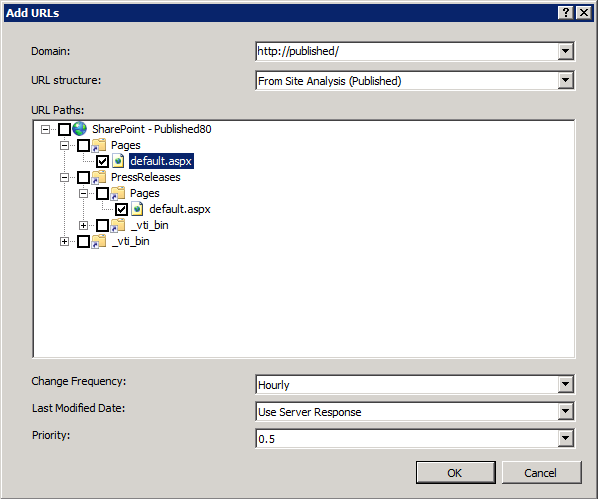

Not very useful for a SharePoint site, is it? But if we Run new Site Analysis from the URL structure drop-down…

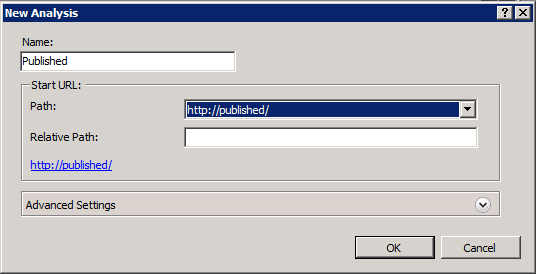

The New Analysis box pops up.

After about 40 seconds of analysis (in my environment) we get the SharePoint site map!

At the bottom of the dialogue, the Change Frequency tells search engines how often pages are likely to change. The Priority indicates how important we consider a URL to be relative to other URLs on our site (I must confess I don’t fully understand how this works, but I’m not responsible for content. 🙂). You can also tell search engines how to identify the Last Modified Date. More information on all of this can be found on TechNet.
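For reference, these three settings correspond to the `changefreq`, `priority` and `lastmod` elements of the sitemaps.org protocol. The Python sketch below builds a small sitemap by hand to show where each value ends up. It is an illustration only, not the toolkit's own output; the function name, URLs and values are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for a list of dicts with 'loc' and optional fields."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # lastmod, changefreq and priority are optional in the protocol.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, tag).text = str(entry[tag])
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages and values, for illustration only.
sitemap_xml = build_sitemap([
    {"loc": "http://example.com/Pages/default.aspx",
     "lastmod": "2010-06-01", "changefreq": "weekly", "priority": 0.8},
    {"loc": "http://example.com/Pages/about.aspx", "changefreq": "monthly"},
])
print(sitemap_xml)
```

Priority is a value between 0.0 and 1.0 and only ranks a URL against other URLs on the same site; it does not affect how the site compares with other sites.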

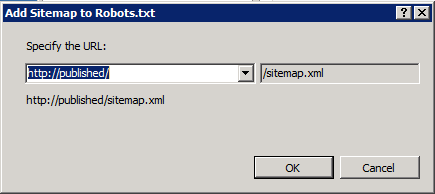

One last thing before moving on. We need to add the Sitemap to our robots.txt file. There’s a handy link in the Actions pane to do so.

Also note, in the Related Features on the Actions pane, there’s a link to Robots Exclusion, which brings us to the final tool.

Robots.txt file management

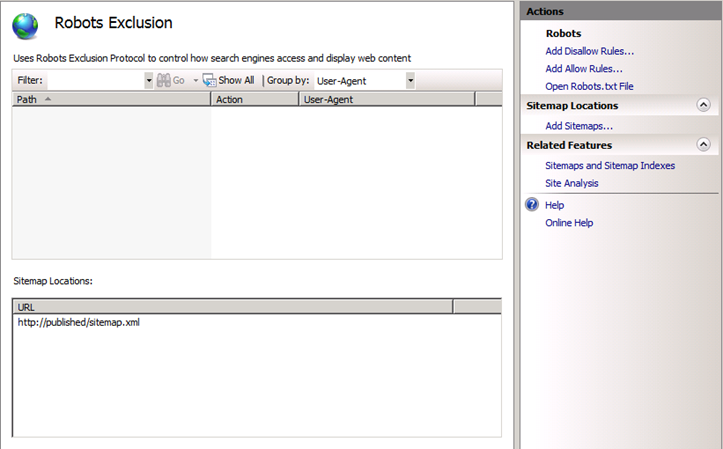

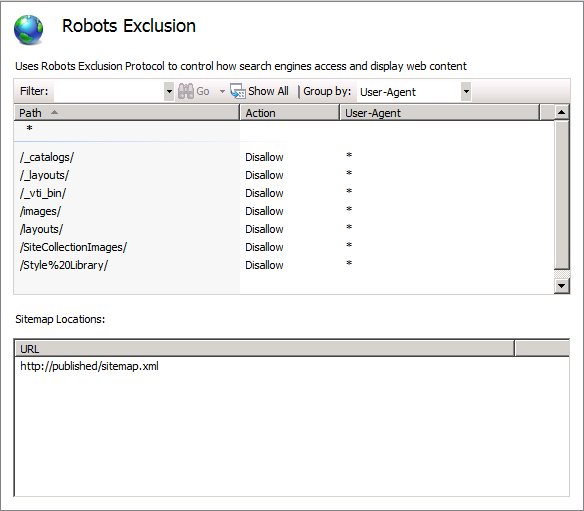

If we click the Robots Exclusion link or the View existing rules link from the SEO Toolkit landing page, we can see that our sitemap.xml file is being referenced, as added above.

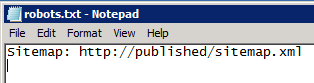

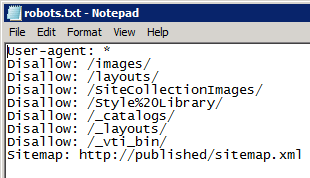

This is confirmed if I click the Open Robots.txt link in the Actions pane.

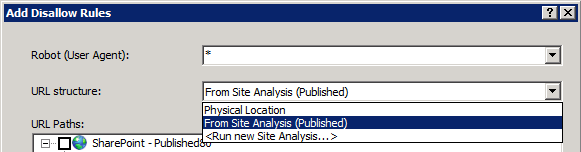

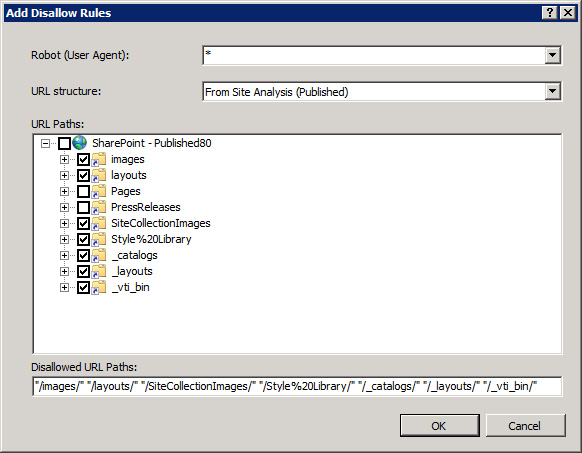

If we click Add Disallow Rules or Add Allow Rules we get a similar dialogue, and in both cases we will want to specify our previous Site Analysis for the URL structure.

Now if I want to exclude all of the content that I don’t want to index I can just tick the appropriate boxes from my last analysis (note that this looks different than the selectable options from the Sitemap dialogue).

Voila! Paths are excluded and the robots.txt file is updated.
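As a rough picture of what ends up in the file, the Python sketch below writes a robots.txt with a few Disallow rules and a Sitemap reference, then checks it with the standard library's `urllib.robotparser`. The excluded paths and URLs are hypothetical examples, and the exact layout the SEO Toolkit writes may differ.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_paths, sitemap_url, user_agent="*"):
    """Return robots.txt text with Disallow rules and a Sitemap reference."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    lines += ["", f"Sitemap: {sitemap_url}"]
    return "\n".join(lines) + "\n"


# Hypothetical paths and sitemap URL, for illustration only.
robots_txt = build_robots_txt(
    ["/_layouts/", "/Lists/"],
    "http://example.com/sitemap.xml",
)
print(robots_txt)

# Verify the rules the way a well-behaved crawler would read them.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
blocked = parser.can_fetch("*", "http://example.com/_layouts/settings.aspx")
allowed = parser.can_fetch("*", "http://example.com/Pages/default.aspx")
print(blocked, allowed)
```

Parsing the generated file back is a cheap way to confirm that a rule blocks what you intended before it goes anywhere near production.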

One thing to note is that the robots.txt file did not appear for me during testing until after I stopped and started the website in IIS. This is only an issue when the file is created for the first time, but it is worth noting. I believe this is also true for the sitemap.xml file.

So… these are good tools! Once configured, the robots.txt shouldn’t need to be updated often and the web managers should become aware of any problems soon after they occur through their own use of the reporting tool. In short, these tools devolve a great deal of control and insight and there seems to be very little reason not to use them.

We have also experimented with generating the server side outputs using PowerShell, which a colleague of mine will detail soon and I will post here when ready. If there is any reluctance to use this IIS.NET extension in production infrastructure, a combination of PowerShell for file generation/management and the SEO Toolkit for reporting may be a sensible solution.

I’d heard about this tool but not yet used it. What a fantastic piece of kit!

Thank you for the insight. The demand for SharePoint SEO is growing as SharePoint front-end websites become more popular.

Can this be done in SharePoint 2013?

I haven’t tried, but it’s an IIS thing rather than a SharePoint thing, so issues may be more likely to emerge because of an IIS version change than because of SharePoint. I’d give it a go. It doesn’t take long to try it out.
